Rethinking Event-Driven Systems: A Retrospective

October 24, 2025Event-driven architectures are everywhere on AWS, but the way teams approach them has changed a lot over the years. When I first started working with these patterns, EventBridge didn’t exist, Pipes weren’t a thing, and many of the “obvious” best practices weren’t quite so obvious.

This article isn’t a comprehensive guide to all the eventing options on AWS. It’s a look back at how I approached event-driven communication in the past, what worked, what didn’t, and how my thinking has evolved with the tools available today. Think of it as a field report, not a framework.

How I Think About Event-Driven Systems

I’ve always approached event-driven systems through the lens of state machines. An event is just a piece of data that may trigger an action on the receiver; that action might, in turn, emit new events. The important loop is trigger → transition → possible emission.

When I explain this to people who don’t come from a distributed systems background, I usually simplify it to:

“It’s like a function call where you don’t wait for the reply.” And if the receiver has to send back an acknowledgment before the producer can continue, you’re effectively implementing synchronous processing on top of asynchronous messaging.

From this model, it’s natural to distinguish between messaging and streaming. In messaging, each event is a trigger. Once it’s processed, the message itself can be discarded. In streaming, events are treated as data points in a continuous flow - usually feeding into a data lake, warehouse, or analytics system.

The distinction often lives on the consumer side: the same business event can be a trigger for one system (e.g. sending a confirmation email) and a data point for another (e.g. BI aggregation). Volume can be a hint too: higher throughput and analytic use cases push you toward streaming, whereas operational workflows usually lean on messaging.

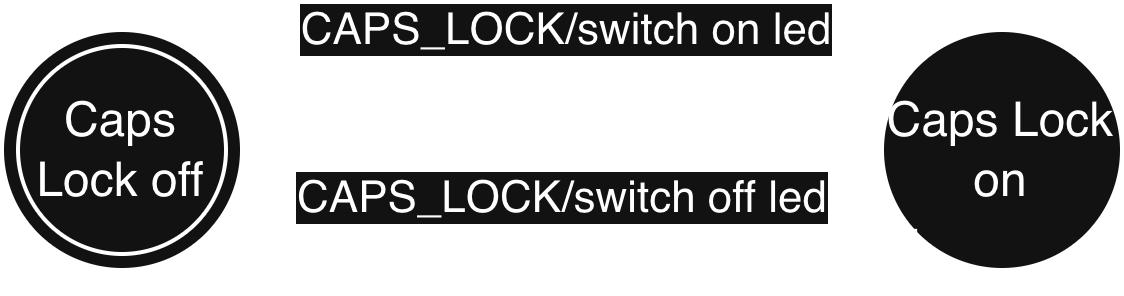

In the simple example state machine below, a keyboard caps lock function is sketched out. We start in the caps lock “off” state. Pressing the caps lock key triggers a transition to the “on” state, emitting a “switch on led” event. Pressing it again triggers a transition back to “off,” emitting a “switch off led” event.

When I design a new system, this mental model directly informs my first questions:

- Fan-out model: Point-to-point or pub/sub? If unclear, start with pub/sub - it covers both.

- Ordering: Is strict ordering actually needed? If yes, the design space narrows quickly.

- Delivery semantics: At-least-once or at-most-once?

- DLQs: Should we have them, and how do they interact with delivery semantics and ordering?

Most real-world architectures end up mixing both messaging and streaming: a single business event is emitted once, then interpreted differently by different consumers.

This way of thinking - focusing on triggers, delivery semantics, and the roles of producers and consumers - also shaped how we approached system design at the time. The architectural choices we made weren’t random; they reflected this mental model and the tooling landscape back then.

Evolving from Stream-Centric to Message-Centric Architectures

At the time, the obvious choice for event distribution was Kinesis. It offered strict ordering guarantees via partition keys, and using it for asynchronous communication seemed like a safe, future-proof decision. Each message type had its own dedicated Kinesis stream, and this pattern was applied consistently across the system.

Over time, however, operational reality caught up with the original design. Message volume stayed low, ordering issues never really materialized, and most consumers didn’t need replay capabilities or long retention - they just needed to react to individual events. The streaming backbone was technically sound but heavier than necessary for the actual semantics in play.

The architecture gradually evolved toward a message-centric design built around SNS topics with SQS subscribers. Each message type continued to have its own dedicated channel, but the underlying transport changed: producers published to SNS topics, operational consumers subscribed via standard or FIFO queues, and analytical consumers used Firehose to persist the same events into a data lake.

This shift brought several benefits:

- Operational simplicity: No shard scaling, and each consumer managed its own retries and DLQs.

- Uniformity: Producers used a straightforward publish model; consumers owned their inboxes.

- Better semantic fit: Most systems were idempotent or could be made so, reducing the need for strict FIFO queues.

At the time, EventBridge and Pipes didn’t exist, and filtering logic was intentionally simple. Looking back, I’d probably consider Pipes to avoid small glue Lambdas, or EventBridge for richer routing in larger ecosystems. But for the scale and requirements then, per-message-type SNS topics with targeted subscriptions were exactly the right level of complexity.

Opinions & Anti-Patterns

Over time, working with these patterns revealed a few recurring misunderstandings and design habits - some subtle, some less so - that shaped how I think about event-driven systems today.

Most teams intuitively understand that distributed messaging isn’t just a function call. What’s less obvious is why messages can arrive out of order and why there’s rarely exactly-once delivery. These gaps in understanding tend to manifest in two ways I’ve seen repeatedly:

- Unnecessary FIFO queues. Teams often enable FIFO “just in case.” It feels safer, but it comes with throughput and latency costs, and in most cases, the ordering guarantees aren’t actually required.

- Duplicate and out-of-order fallout. The most common symptom is a 3 a.m. PagerDuty alert because a single bad message in a batch blocked progress. These incidents are rarely exotic - they’re almost always caused by simple retry and acknowledgment issues.

Illustration of me, delighted about a PagerDuty alert at 3 a.m.

Illustration of me, delighted about a PagerDuty alert at 3 a.m.

My response to these problems is pragmatic and strict:

- Make DLQs mandatory. Every consumer must have its own DLQ policy. A bad message shouldn’t take the system hostage, and DLQs are the pressure valve that prevents it.

- Require per-message acknowledgements. If a consumer crashes mid-batch, already-processed messages shouldn’t be re-executed just because the batch failed.

These are consumer responsibilities. Producers and consumers must agree on delivery semantics, including both delivery guarantees (at-least-once vs. at-most-once) and ordering. Once that contract exists, each side must uphold it. Over time, this discipline naturally pushes systems toward idempotency by default, which reduces FIFO misuse and limits the blast radius of retries.

The last point I’d stress is about routing complexity. When routing involves custom Lambdas or hand-rolled pipelines, engineers naturally hesitate before adding new routes - it’s work. But with primitives like SNS subscriptions, EventBridge rules, and Pipes, routing becomes almost trivial. That’s powerful, but it also makes it easy to create invisible, tangled event graphs. My rule is simple: keep routing discoverable and intentional, with clear contracts and minimal surprise subscribers.

Closing Thoughts

Looking back, what stands out to me isn’t any single technology choice, but how much of the architecture was ultimately shaped by semantics rather than tools. The tooling changed over time - first Kinesis, then SNS and SQS, and today EventBridge and Pipes - but the fundamental questions have always been the same:

- What’s the fan-out model?

- Do we need ordering?

- Which delivery semantics are we committing to?

- How do we handle failure?

- How much throughput do we need?

- Do we need high availability or horizontal scalability?

These questions shape the system long before you pick a service.

Since then, the AWS toolbox has expanded significantly. EventBridge, Pipes, schema registries, and richer routing capabilities now make patterns possible that would have been cumbersome years ago. They remove a lot of the mechanical overhead, but simplicity at the tooling level doesn’t prevent complexity at the system level.

For me, the core lesson hasn’t changed: the hard part isn’t choosing the service - it’s being deliberate about the semantics. Once those are clear, the tooling usually falls into place.